- Introduction

- Before you start

- Design and architecture

- System requirements

- Installation

- Upgrading Barman

- Configuration

- Setup of a new server in Barman

- General commands

- Server commands

- Backup commands

- Features in detail

- Barman client utilities (barman-cli)

- Barman client utilities for the Cloud (barman-cli-cloud)

- Troubleshooting

- The Barman project

Barman (Backup and Recovery Manager) is an open-source administration tool for disaster recovery of PostgreSQL servers written in Python. It allows your organisation to perform remote backups of multiple servers in business critical environments to reduce risk and help DBAs during the recovery phase.

Barman is distributed under GNU GPL 3 and maintained by EnterpriseDB, a platinum sponsor of the PostgreSQL project.

IMPORTANT: This manual assumes that you are familiar with theoretical disaster recovery concepts, and that you have a grasp of PostgreSQL fundamentals in terms of physical backup and disaster recovery. See section "Before you start" below for details.

Introduction

In a perfect world, there would be no need for a backup. However, it is important, especially in business environments, to be prepared for when the "unexpected" happens. In a database scenario, the unexpected could take any of the following forms:

- data corruption

- system failure (including hardware failure)

- human error

- natural disaster

In such cases, any ICT manager or DBA should be able to fix the incident and recover the database in the shortest time possible. We normally refer to this discipline as disaster recovery, and more broadly business continuity.

Within business continuity, it is important to familiarise yourself with two fundamental metrics, as defined by Wikipedia:

- Recovery Point Objective (RPO): "maximum targeted period in which data might be lost from an IT service due to a major incident"

- Recovery Time Objective (RTO): "the targeted duration of time and a service level within which a business process must be restored after a disaster (or disruption) in order to avoid unacceptable consequences associated with a break in business continuity"

In a few words, RPO represents the maximum amount of data you can afford to lose, while RTO represents the maximum down-time you can afford for your service.

Understandably, we all want RPO=0 ("zero data loss") and RTO=0 (zero down-time, utopia) - even if it is our grandmother's recipe website. In reality, a careful cost analysis phase allows you to determine your business continuity requirements.

Fortunately, with an open source stack composed of Barman and PostgreSQL, you can achieve RPO=0 thanks to synchronous streaming replication. RTO is more the focus of a High Availability solution, like repmgr. Therefore, by integrating Barman and repmgr, you can dramatically reduce RTO to nearly zero.

Based on our experience at EnterpriseDB, we can confirm that PostgreSQL open source clusters with Barman and repmgr can easily achieve more than 99.99% uptime over a year, if properly configured and monitored.
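To put the 99.99% figure in perspective, the following is a minimal arithmetic sketch that converts an uptime target into a yearly downtime budget. The function name is hypothetical and a non-leap year of 365 days is assumed.

```python
# Worked arithmetic: yearly downtime allowed by an availability target.
# Assumption: a non-leap year of 365 days.
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def downtime_budget_minutes(availability_percent: float) -> float:
    """Return the maximum downtime per year, in minutes, for an uptime target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for target in (99.9, 99.99, 99.999):
        budget = downtime_budget_minutes(target)
        print(f"{target}% uptime -> {budget:.2f} minutes of downtime per year")
```

Under these assumptions, 99.99% uptime leaves roughly 52.56 minutes of downtime per year, which is the budget that your backup and recovery procedures have to fit into.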

In any case, it is important for us to place more emphasis on the cultural aspects of disaster recovery than on the actual tools. Tools without human beings are useless.

Our mission with Barman is to promote a culture of disaster recovery that:

- focuses on backup procedures

- focuses even more on recovery procedures

- relies on education and training on strong theoretical and practical concepts of PostgreSQL's crash recovery, backup, Point-In-Time-Recovery, and replication for your team members

- promotes testing your backups (only a backup that is tested can be considered to be valid), either manually or automatically (be creative with Barman's hook scripts!)

- fosters regular practice of recovery procedures, by all members of your devops team (yes, developers too, not just system administrators and DBAs)

- calls for regularly scheduled drills and disaster recovery simulations with the team every 3-6 months

- relies on continuous monitoring of PostgreSQL and Barman, and that is able to promptly identify any anomalies

Moreover, do everything you can to prepare yourself and your team for when the disaster happens (yes, when), because when it happens:

- It is going to be a Friday evening, most likely right when you are about to leave the office.

- It is going to be when you are on holiday (right in the middle of your cruise around the world) and somebody else has to deal with it.

- It is certainly going to be stressful.

- You will regret not being sure that the last available backup is valid.

- Unless you know how long it approximately takes to recover, every second will seem like forever.

Be prepared, don't be scared.

In 2011, with these goals in mind, 2ndQuadrant started the development of Barman, now one of the most used backup tools for PostgreSQL. Barman is an acronym for "Backup and Recovery Manager".

Currently, Barman works only on Linux and Unix operating systems.

Before you start

Before you start using Barman, it is fundamental that you get familiar with PostgreSQL and the concepts around physical backups, Point-In-Time-Recovery and replication, such as base backups, WAL archiving, etc.

Below you can find a non-exhaustive list of resources that we recommend you read:

- PostgreSQL documentation:

- Book: PostgreSQL 10 Administration Cookbook

Professional training on these topics is another effective way of learning these concepts. At any time of the year you can find many courses available all over the world, delivered by PostgreSQL companies such as EnterpriseDB.

Design and architecture

Where to install Barman

One of the foundations of Barman is the ability to operate remotely from the database server, via the network.

Theoretically, you could have your Barman server located in a data centre in another part of the world, thousands of miles away from your PostgreSQL server. Realistically, you do not want your Barman server to be too far from your PostgreSQL server, so that both backup and recovery times are kept under control.

Even though there is no "one size fits all" way to set up Barman, there are a couple of recommendations that we suggest you abide by, in particular:

- Install Barman on a dedicated server

- Do not share the same storage with your PostgreSQL server

- Integrate Barman with your monitoring infrastructure

- Test everything before you deploy it to production

A reasonable way to start modelling your disaster recovery architecture is to:

- design a couple of possible architectures in respect to PostgreSQL and Barman, such as:

  - same data centre

  - different data centre in the same metropolitan area

  - different data centre

- elaborate the pros and the cons of each hypothesis

- evaluate the single points of failure (SPOF) of your system, with cost-benefit analysis

- make your decision and implement the initial solution

Having said this, a very common setup for Barman is to be installed in the same data centre where your PostgreSQL servers are. In this case, the single point of failure is the data centre. Fortunately, the impact of such a SPOF can be alleviated thanks to two features that Barman provides to increase the number of backup tiers:

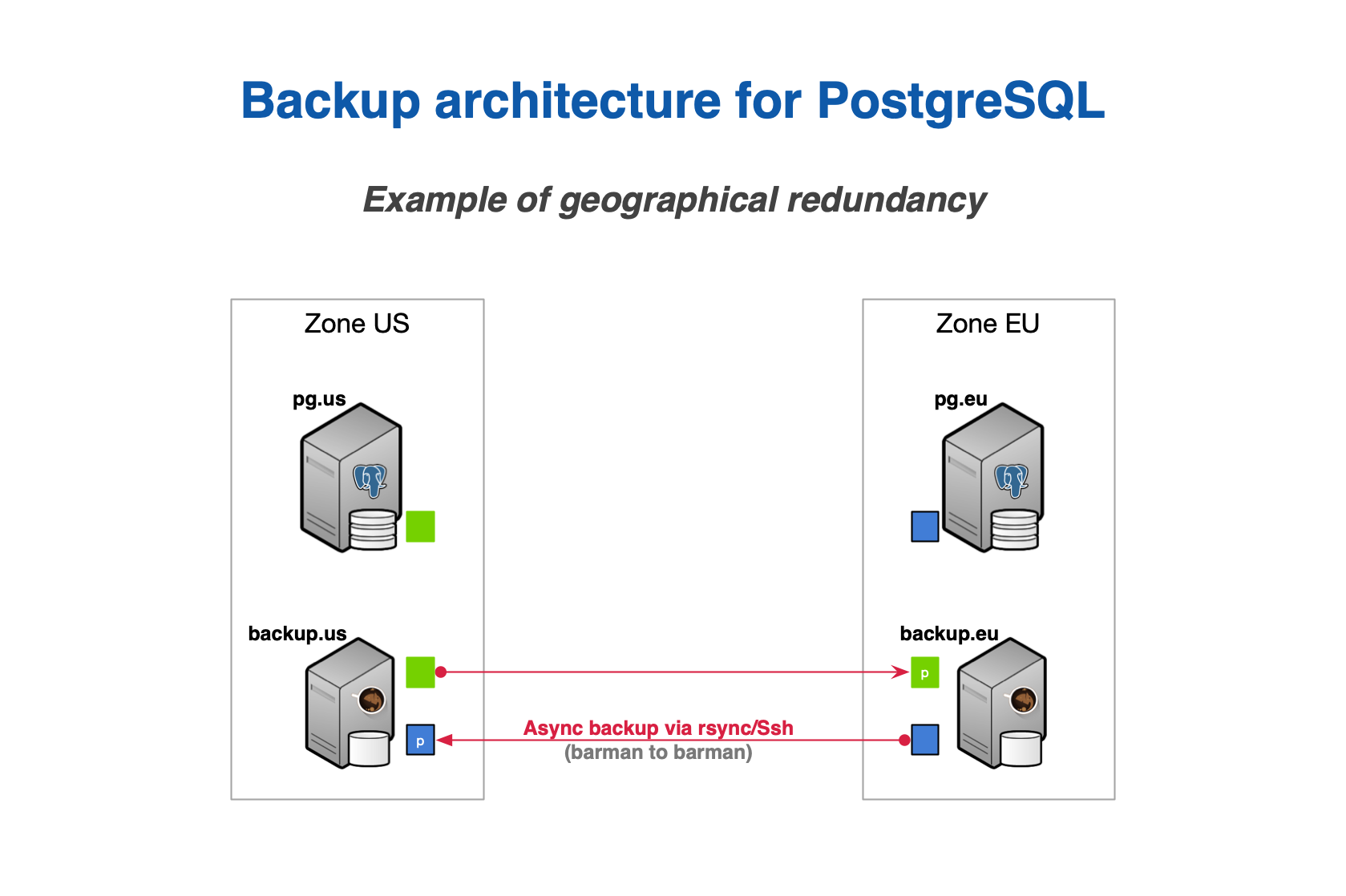

- geographical redundancy (introduced in Barman 2.6)

- hook scripts

With geographical redundancy, you can rely on a Barman instance that is located in a different data centre/availability zone to synchronise the entire content of the source Barman server. There's more: given that geo-redundancy can be configured in Barman not only at global level, but also at server level, you can create hybrid installations of Barman where some servers are directly connected to the local PostgreSQL servers, and others are backing up subsets of different Barman installations (cross-site backup). Figure below shows two availability zones (one in Europe and one in the US), each with a primary PostgreSQL server that is backed up in a local Barman installation, and relayed on the other Barman server (defined as passive) for multi-tier backup via rsync/SSH. Further information on geo-redundancy is available in the specific section.

Thanks to hook scripts instead, backups of Barman can be exported on different media, such as tape via tar, or locations, like an S3 bucket in the Amazon cloud.

Remember that no decision is forever. You can start this way and adapt over time to the solution that suits you best. However, try and keep it simple to start with.

One Barman, many PostgreSQL servers

Another relevant feature that was first introduced by Barman is support for multiple servers. Barman can store backup data coming from multiple PostgreSQL instances, even with different versions, in a centralised way.

As a result, you can model complex disaster recovery architectures, forming a "star schema", where PostgreSQL servers rotate around a central Barman server.

Every architecture makes sense in its own way. Choose the one that resonates with you, and most importantly, the one you trust, based on real experimentation and testing.

From this point forward, for the sake of simplicity, this guide will assume a basic architecture:

- one PostgreSQL instance (with host name pg)

- one backup server with Barman (with host name backup)

Streaming backup vs rsync/SSH

Barman is able to take backups using either Rsync, which uses SSH as a transport mechanism, or pg_basebackup, which uses PostgreSQL's streaming replication protocol.

Choosing one of these two methods is a decision you will need to make; however, for general usage we recommend using streaming replication for all currently supported versions of PostgreSQL.

IMPORTANT: Because Barman transparently makes use of pg_basebackup, features such as parallel backup are currently not available. In this case, bandwidth limitation has some restrictions - compared to the traditional method via rsync.

Backup using rsync/SSH is recommended in cases where pg_basebackup limitations pose an issue for you.

The reason why we recommend streaming backup is that, based on our experience, it is easier to set up than the traditional one. Also, streaming backup allows you to back up a PostgreSQL server on Windows, and makes life easier when working with Docker.

The Barman WAL archive

Recovering a PostgreSQL backup relies on replaying transaction logs (also known as xlog or WAL files). It is therefore essential that WAL files are stored by Barman alongside the base backups so that they are available at recovery time. This can be achieved using either WAL streaming or standard WAL archiving to copy WALs into Barman's WAL archive.

WAL streaming involves streaming WAL files from the PostgreSQL server with pg_receivewal using replication slots. WAL streaming is able to reduce the risk of data loss, bringing RPO down to near zero values. It is also possible to add Barman as a synchronous WAL receiver in your PostgreSQL cluster and achieve zero data loss (RPO=0).

Barman also supports standard WAL file archiving which is achieved using PostgreSQL's archive_command (either via rsync/SSH, or via barman-wal-archive from the barman-cli package). With this method, WAL files are archived only when PostgreSQL switches to a new WAL file. To keep it simple this normally happens every 16MB worth of data changes.
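As a rough illustration of what "every 16MB worth of data changes" means for RPO with archive_command alone, the sketch below estimates how long a WAL file takes to fill at a given write rate. The function name is hypothetical, a constant write rate is assumed, and the estimate ignores transfer time as well as PostgreSQL's archive_timeout setting, which can force a switch sooner.

```python
# Assumption: the default WAL segment size of 16 MB mentioned above.
WAL_SEGMENT_BYTES = 16 * 1024 * 1024


def seconds_per_wal_segment(write_rate_bytes_per_sec: float) -> float:
    """Estimate the seconds needed to fill one WAL segment at a constant rate.

    With archive_command only, changes in the segment currently being written
    have not yet been shipped, so this is a rough upper bound on the window of
    data at risk.
    """
    if write_rate_bytes_per_sec <= 0:
        raise ValueError("write rate must be positive")
    return WAL_SEGMENT_BYTES / write_rate_bytes_per_sec


if __name__ == "__main__":
    for label, rate in (("1 MiB/s", 1024 * 1024), ("64 KiB/s", 64 * 1024)):
        print(f"{label}: a WAL file is completed about every "
              f"{seconds_per_wal_segment(rate):.0f} seconds")
```

A busy server switches WAL files frequently, while a quiet one may take minutes or longer between switches. This is one reason why WAL streaming can bring RPO closer to zero.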

It is required that one of WAL streaming or WAL archiving is configured. It is optionally possible to configure both WAL streaming and standard WAL archiving - in such cases Barman will automatically de-duplicate incoming WALs. This provides a fallback mechanism so that WALs are still copied to Barman's archive in the event that WAL streaming fails.

For general usage we recommend configuring WAL streaming only.

NOTE: Previous versions of Barman recommended that both WAL archiving and WAL streaming were used. This was because PostgreSQL versions older than 9.4 did not support replication slots and therefore WAL streaming alone could not guarantee all WALs would be safely stored in Barman's WAL archive. Since all supported versions of PostgreSQL now have replication slots it is sufficient to configure only WAL streaming.

Two typical scenarios for backups

In order to make life easier for you, below we summarise the two most typical scenarios for a given PostgreSQL server in Barman.

Bear in mind that this is a decision that you must make for every single server that you decide to back up with Barman. This means that you can have heterogeneous setups within the same installation.

As mentioned before, we will only worry about the PostgreSQL server (pg) and the Barman server (backup). However, in real life, your architecture will most likely contain other technologies such as repmgr, pgBouncer, Nagios/Icinga, and so on.

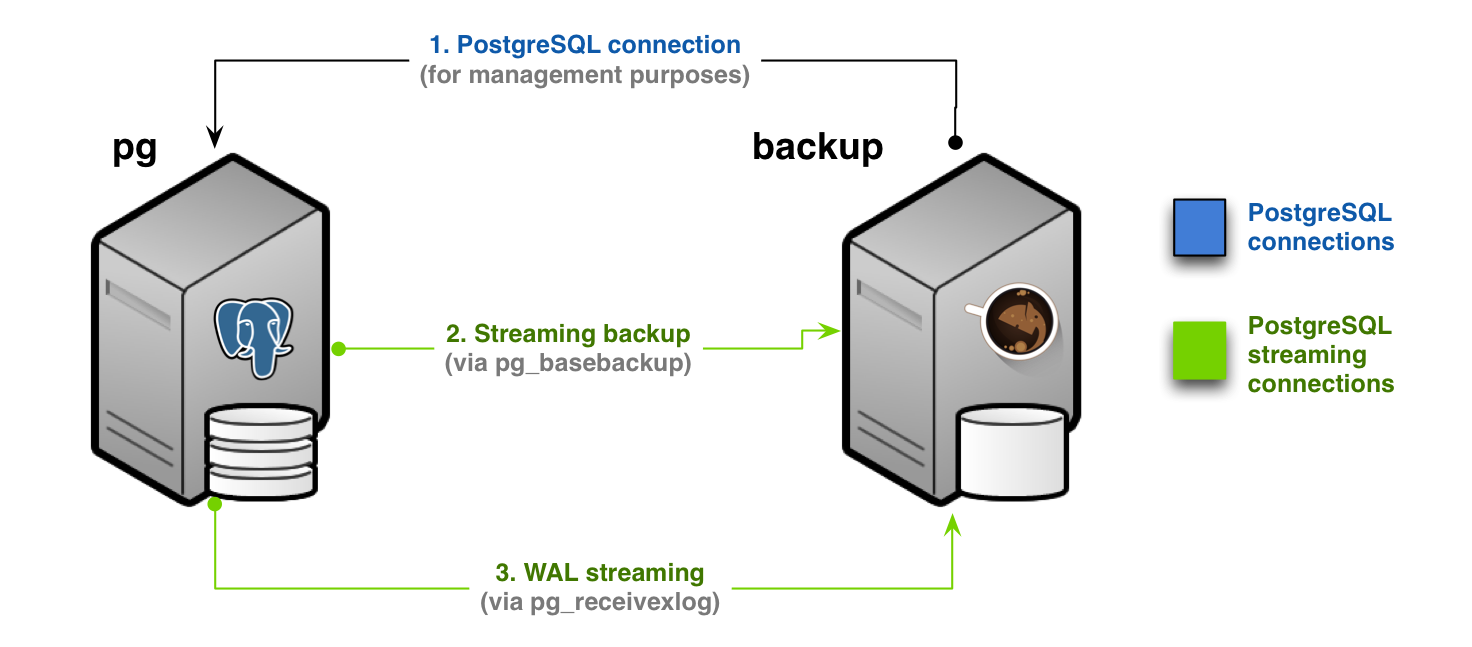

Scenario 1: Backup via streaming protocol

A streaming backup installation is recommended for most use cases - see figure below.

In this scenario, you will need to configure:

- a standard connection to PostgreSQL, for management, coordination, and monitoring purposes

- a streaming replication connection that will be used by both pg_basebackup (for base backup operations) and pg_receivewal (for WAL streaming)

In Barman's terminology this setup is known as streaming-only setup as it does not use an SSH connection for backup and archiving operations. This is particularly suitable and extremely practical for Docker environments.

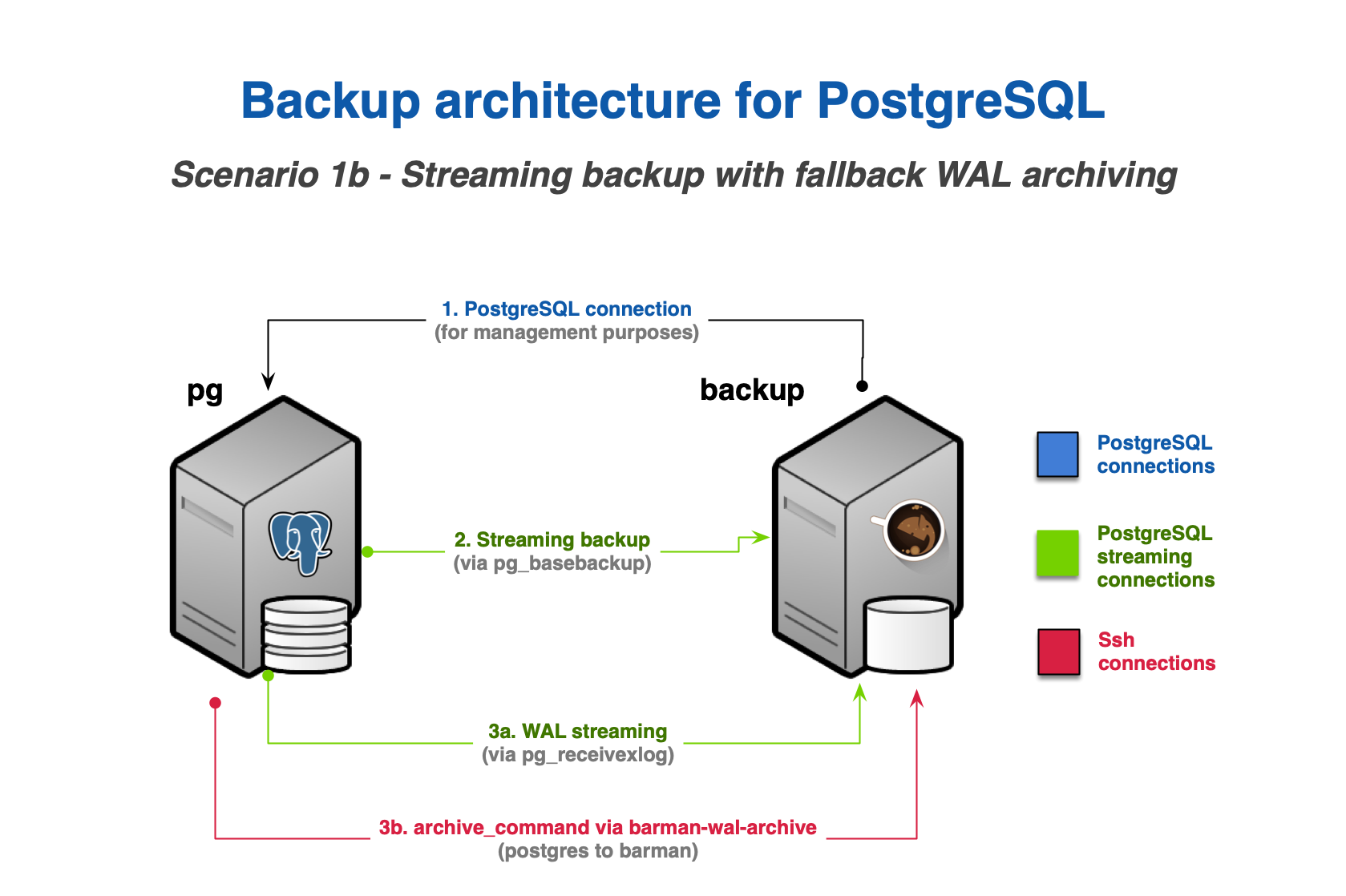

As discussed in "The Barman WAL archive", you can configure WAL archiving via SSH in addition to WAL streaming - see figure below.

WAL archiving via SSH requires:

- an additional SSH connection that allows the postgres user on the PostgreSQL server to connect as barman user on the Barman server

- the archive_command in PostgreSQL to be configured to ship WAL files to Barman

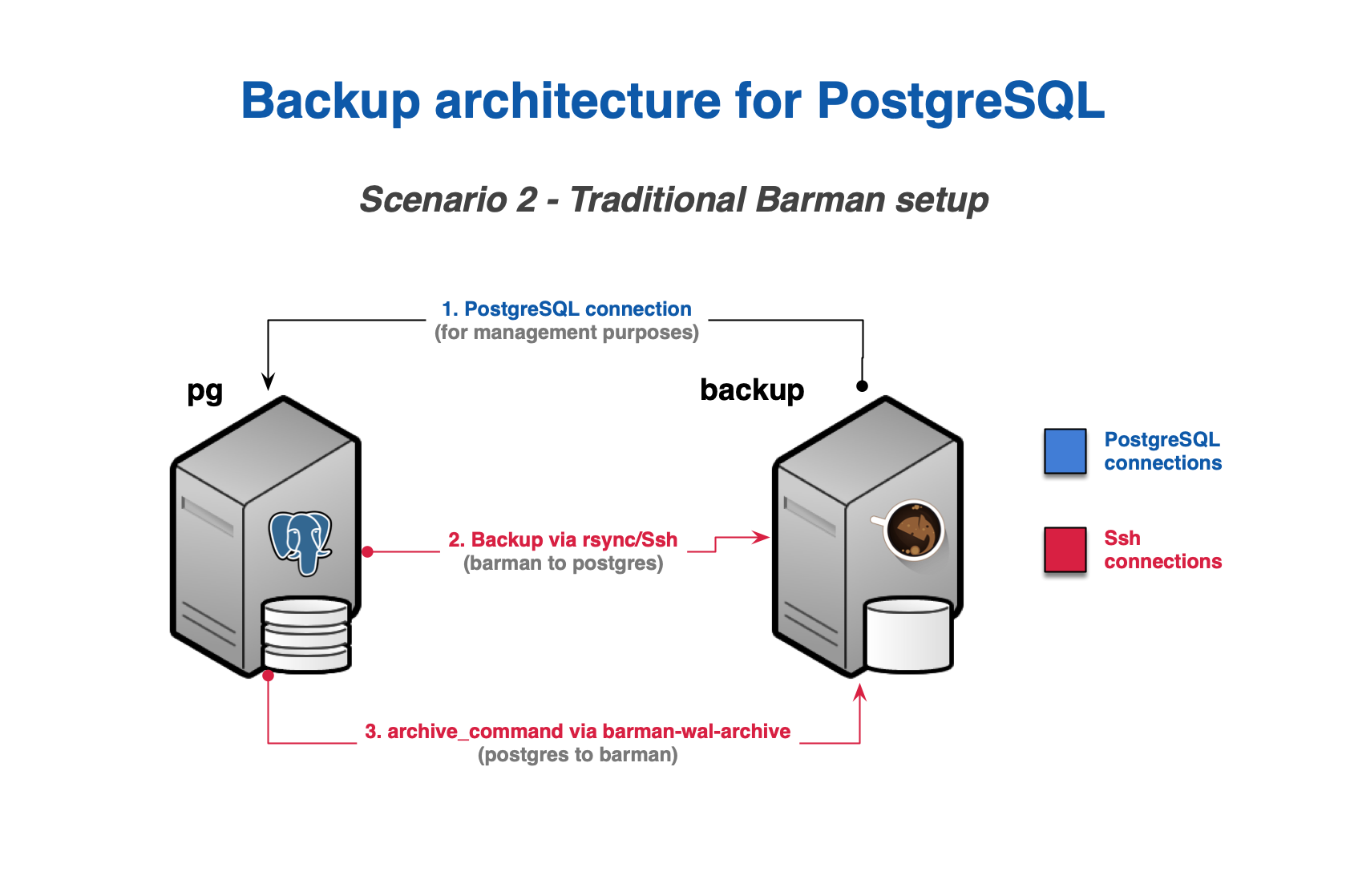

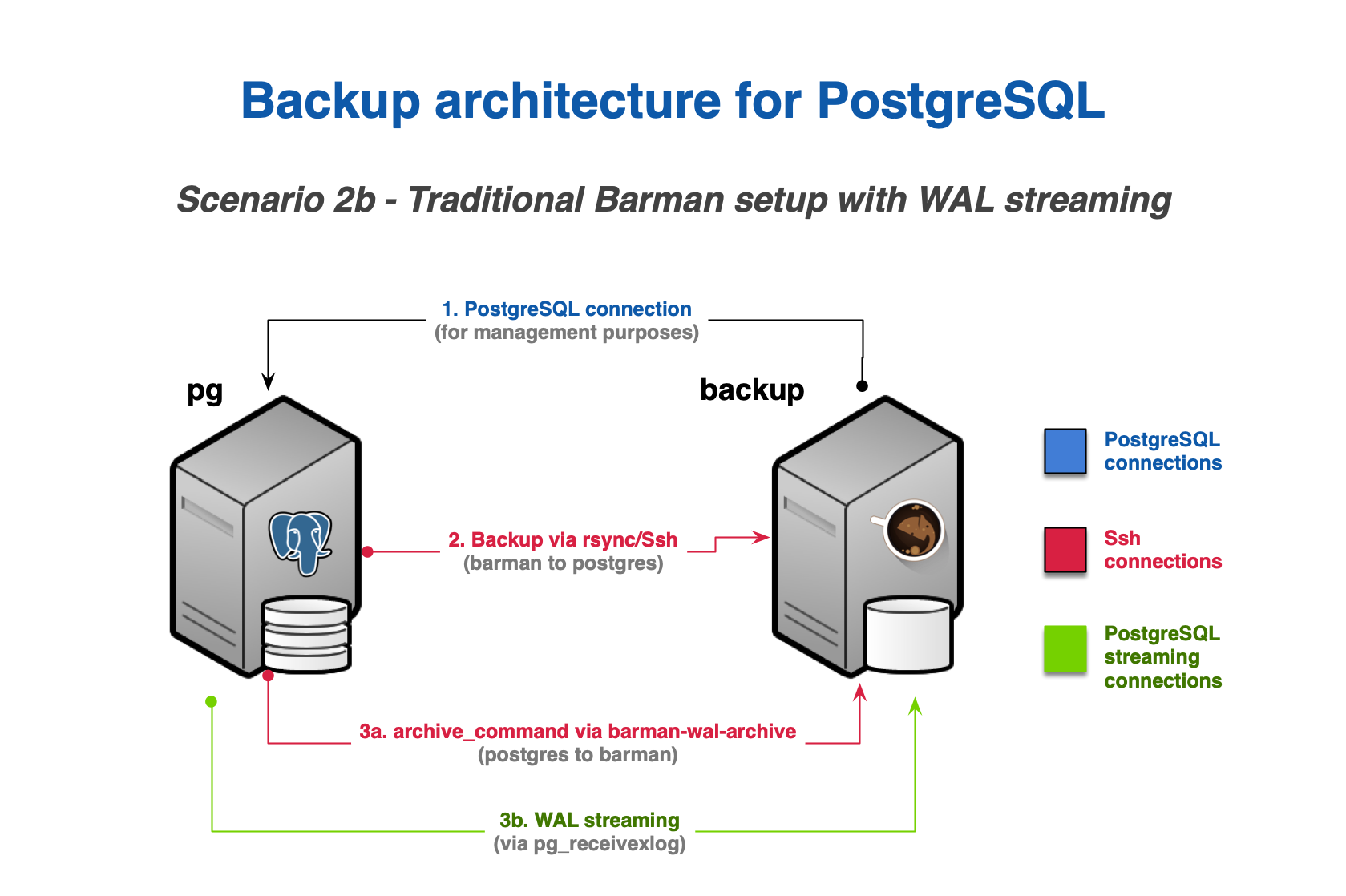

Scenario 2: Backup via rsync/SSH

An rsync/SSH backup installation is needed when any of the following features is required:

- file-level incremental backup

- parallel backup

- finer control of bandwidth usage, including on a per-tablespace basis

In this scenario, you will need to configure:

- a standard connection to PostgreSQL for management, coordination, and monitoring purposes

- an SSH connection for base backup operations to be used by rsync that allows the barman user on the Barman server to connect as postgres user on the PostgreSQL server

- an SSH connection for WAL archiving to be used by the archive_command in PostgreSQL and that allows the postgres user on the PostgreSQL server to connect as barman user on the Barman server

As an alternative to configuring WAL archiving in step 3, you can instead configure WAL streaming as described in Scenario 1. This will use a streaming replication connection instead of archive_command and significantly reduce RPO. As with Scenario 1 it is also possible to configure both WAL streaming and WAL archiving as shown in figure below.
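The choice between the two scenarios can be summarised as a small decision helper. This is only a sketch of the guidance above: the feature labels and the function name are hypothetical, while the returned strings match the backup_method values used in the configuration examples of this manual.

```python
# Hypothetical labels for the features that, per the text above, require rsync/SSH.
RSYNC_ONLY_FEATURES = {
    "incremental_backup",        # file-level incremental backup
    "parallel_backup",           # parallel backup
    "per_tablespace_bandwidth",  # finer control of bandwidth usage
}


def suggest_backup_method(required_features):
    """Return 'rsync' if any rsync-only feature is required, else 'postgres'."""
    required = set(required_features)
    unknown = required - RSYNC_ONLY_FEATURES
    if unknown:
        raise ValueError(f"unknown feature labels: {sorted(unknown)}")
    return "rsync" if required else "postgres"


if __name__ == "__main__":
    print(suggest_backup_method([]))                   # streaming backup (Scenario 1)
    print(suggest_backup_method(["parallel_backup"]))  # rsync/SSH backup (Scenario 2)
```

Remember that the decision is made per server, so the helper would be applied once for each PostgreSQL server you back up.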

System requirements

- Linux/Unix

- Python >= 3.6

- Python modules:

- argcomplete (optional)

- psycopg2 >= 2.4.2

- python-dateutil

- setuptools

- PostgreSQL >= 10 (next version will require PostgreSQL >= 11)

- rsync >= 3.1.0 (optional)

IMPORTANT: Users of RedHat Enterprise Linux, CentOS and Scientific Linux are required to install the Extra Packages Enterprise Linux (EPEL) repository.

NOTE: Support for Python 2.6 and 3.5 is discontinued. Support for Python 2.7 is limited to Barman 3.4.X versions, which will receive only bug fixes, and will be discontinued in the near future. Support for Python 3.6 will be discontinued in future releases. Support for PostgreSQL < 10 is discontinued since Barman 3.0.0. Support for PostgreSQL 10 will be discontinued after Barman 3.5.0.

Requirements for backup

The most critical requirement for a Barman server is the amount of disk space available. You are recommended to plan the required disk space based on the size of the cluster, number of WAL files generated per day, frequency of backups, and retention policies.
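The planning factors listed above can be combined into a first-order estimate. The sketch below is a simplification under stated assumptions: every retained backup is counted as a full copy of the cluster, WAL volume is constant per day, and compression and file-level deduplication are ignored. The function and parameter names are hypothetical.

```python
def estimate_disk_bytes(cluster_bytes, wal_bytes_per_day, backups_retained,
                        retention_days, headroom_percent=20):
    """First-order estimate of the disk space needed on the Barman server.

    Assumptions: each retained backup is a full copy of the cluster, WAL
    volume is constant, and no compression or deduplication is applied.
    """
    base_backups = cluster_bytes * backups_retained
    wal_archive = wal_bytes_per_day * retention_days
    total = base_backups + wal_archive
    # Integer arithmetic keeps the result exact for large byte counts.
    return total * (100 + headroom_percent) // 100


if __name__ == "__main__":
    GiB = 2 ** 30
    needed = estimate_disk_bytes(
        cluster_bytes=100 * GiB,      # size of the PostgreSQL cluster
        wal_bytes_per_day=10 * GiB,   # WAL generated per day
        backups_retained=4,           # e.g. weekly backups kept for four weeks
        retention_days=28,
    )
    print(f"Plan for about {needed // GiB} GiB")
```

With the sample figures, the estimate is (4 x 100 GiB + 28 x 10 GiB) x 1.2 = 816 GiB. Treat the result as a starting point and refine it with real measurements from your environment.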

Barman developers regularly test Barman with XFS and ext4. Like PostgreSQL, Barman does nothing special for NFS. The following points are required for safely using Barman with NFS:

- The barman_lock_directory should be on a non-network filesystem.

- Use version 4 of the NFS protocol.

- The file system must be mounted using the hard and synchronous options (hard,sync).
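The protocol version and mount option requirements can be checked against a line in the /proc/mounts format (device, mount point, file system type, options). The following is a hedged sketch: the function name, the sample host and the sample paths are hypothetical, and the location of barman_lock_directory is not checked.

```python
def nfs_mount_problems(mounts_line: str) -> list:
    """Return the NFS requirements violated by one /proc/mounts-style line."""
    _device, _mount_point, fstype, options = mounts_line.split()[:4]
    opts = options.split(",")
    problems = []
    # NFSv4 mounts are reported as type "nfs4" or carry a vers=4.x option.
    is_v4 = fstype == "nfs4" or any(
        o.startswith(("vers=4", "nfsvers=4")) for o in opts
    )
    if not is_v4:
        problems.append("NFS protocol version 4 is required")
    if "hard" not in opts:
        problems.append("the 'hard' mount option is required")
    if "sync" not in opts:
        problems.append("the 'sync' mount option is required")
    return problems


if __name__ == "__main__":
    # Hypothetical sample line; on a real system, read /proc/mounts instead.
    sample = "nas:/export /var/lib/barman nfs4 rw,sync,hard,vers=4.2 0 0"
    print(nfs_mount_problems(sample) or "mount options look fine")
```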

Requirements for recovery

Barman allows you to recover a PostgreSQL instance either locally (where Barman resides) or remotely (on a separate server).

Remote recovery is definitely the most common way to restore a PostgreSQL server with Barman.

Either way, the same requirements for PostgreSQL's Log shipping and Point-In-Time-Recovery apply:

- identical hardware architecture

- identical major version of PostgreSQL

In general, it is highly recommended to create recovery environments that are as similar as possible, if not identical, to the original server, because they are easier to maintain. For example, we suggest that you use the same operating system, the same PostgreSQL version, the same disk layouts, and so on.

Additionally, dedicated recovery environments for each PostgreSQL server, even on demand, allow you to nurture the disaster recovery culture in your team. You can be prepared for when something unexpected happens by practising recovery operations and becoming familiar with them.

Based on our experience, designated recovery environments reduce the impact of stress in real failure situations, and therefore increase the effectiveness of recovery operations.

Finally, it is important that time is synchronised between the servers, using NTP for example.

Installation

Official packages for Barman are distributed by EnterpriseDB through repositories listed on the Barman downloads page.

These packages use the default python3 version provided by the target operating system. If an alternative python3 version is required then you will need to install Barman from source.

IMPORTANT: The recommended way to install Barman is by using the available packages for your GNU/Linux distribution.

Installation on Red Hat Enterprise Linux (RHEL) and RHEL-based systems using RPM packages

Barman can be installed using RPM packages on RHEL8 and RHEL7 systems and the identical versions of RHEL derivatives AlmaLinux, Oracle Linux, and Rocky Linux. It is required to install the Extra Packages Enterprise Linux (EPEL) repository and the PostgreSQL Global Development Group RPM repository beforehand.

Official RPM packages for Barman are distributed by EnterpriseDB via Yum through the public RPM repository, by following the instructions you find on that website.

Then, as root simply type:

In addition to the Barman packages available in the EDB and PGDG repositories, Barman RPMs published by the Fedora project can be found in EPEL. These RPMs are not maintained by the Barman developers and use a different configuration layout to the packages available in the PGDG and EDB repositories:

- EDB and PGDG packages use /etc/barman.conf as the main configuration file and /etc/barman.d for additional configuration files.

- The Fedora packages use /etc/barman/barman.conf as the main configuration file and /etc/barman/conf.d for additional configuration files.

The difference in configuration file layout means that upgrades between the EPEL and non-EPEL Barman packages can break existing Barman installations until configuration files are manually updated. We therefore recommend that you use a single source repository for Barman packages. This can be achieved by adding the following line to the definition of the repositories from which you do not want to obtain Barman packages:

Specifically:

- To use only Barman packages from the EDB repositories, add the exclude directive from above to repository definitions in /etc/yum.repos.d/epel.repo and /etc/yum.repos.d/pgdg-*.repo.

- To use only Barman packages from the PGDG repositories, add the exclude directive from above to repository definitions in /etc/yum.repos.d/epel.repo and /etc/yum.repos.d/enterprisedb*.repo.

- To use only Barman packages from the EPEL repositories, add the exclude directive from above to repository definitions in /etc/yum.repos.d/pgdg-*.repo and /etc/yum.repos.d/enterprisedb*.repo.

Installation on Debian/Ubuntu using packages

Barman can be installed on Debian and Ubuntu Linux systems using packages.

Barman is directly available in the official repositories for Debian and Ubuntu; however, these repositories might not contain the latest available version. If you want to have the latest version of Barman, the recommended method is to enable both of these repositories:

- Public APT repository, directly maintained by Barman developers

- the PostgreSQL Community APT repository, by following instructions in the APT section of the PostgreSQL Wiki

NOTE: Thanks to the direct involvement of Barman developers in the PostgreSQL Community APT repository project, you will always have access to the most updated versions of Barman.

Installing Barman is just as easy. As root user, simply type:

Installation on SLES using packages

Barman can be installed on SLES systems using packages available in the PGDG SLES repositories. Install the necessary repository by following the instructions available on the PGDG site.

Supported SLES version: SLES 15 SP3.

Once the necessary repositories have been installed you can install Barman as the root user:

Installation from sources

WARNING: Manual installation of Barman from sources should only be performed by expert GNU/Linux users. Installing Barman this way requires system administration activities such as dependencies management, barman user creation, configuration of the barman.conf file, cron setup for the barman cron command, log management, and so on.

Create a system user called barman on the backup server. As barman user, download the sources and uncompress them.

For a system-wide installation, type:

    barman@backup$ ./setup.py build
    # run this command with root privileges or through sudo
    barman@backup# ./setup.py install

For a local installation, type:

The barman application will be installed in your user directory (make sure that your PATH environment variable is set properly).

Barman is also available on the Python Package Index (PyPI) and can be installed through pip.

PostgreSQL client/server binaries

The following Barman features depend on PostgreSQL binaries:

- Streaming backup with backup_method = postgres (requires pg_basebackup)

- Streaming WAL archiving with streaming_archiver = on (requires pg_receivewal or pg_receivexlog)

- Verifying backups with barman verify-backup (requires pg_verifybackup)

Depending on the target OS these binaries are installed with either the PostgreSQL client or server packages:

- On RedHat/CentOS and SLES:

  - The pg_basebackup and pg_receivewal/pg_receivexlog binaries are installed with the PostgreSQL client packages.

  - The pg_verifybackup binary is installed with the PostgreSQL server packages.

  - All binaries are installed in /usr/pgsql-${PG_MAJOR_VERSION}/bin.

- On Debian/Ubuntu:

  - All binaries are installed with the PostgreSQL client packages.

  - The binaries are installed in /usr/lib/postgresql/${PG_MAJOR_VERSION}/bin.

You must ensure that either:

- The Barman user has the bin directory for the appropriate PG_MAJOR_VERSION on its path, or:

- The path_prefix option is set in the Barman configuration for each server and points to the bin directory for the appropriate PG_MAJOR_VERSION.
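A quick way to confirm that the binaries can be found is sketched below, using shutil.which from the Python standard library. The helper name missing_binaries is hypothetical, the list of binaries assumes you use all three features listed earlier, and the script is meant to be run as the Barman user.

```python
import os
import shutil

# Binaries required by the features listed above (pg_receivexlog is the
# name used by older PostgreSQL versions instead of pg_receivewal).
REQUIRED_BINARIES = ("pg_basebackup", "pg_receivewal", "pg_verifybackup")


def missing_binaries(bin_dir=None, names=REQUIRED_BINARIES):
    """Return the binaries that cannot be found.

    When bin_dir is given (for example the directory you intend to use as
    path_prefix), only that directory is searched; otherwise the PATH of
    the current user is used.
    """
    search_path = bin_dir if bin_dir is not None else os.environ.get("PATH", "")
    return [name for name in names if shutil.which(name, path=search_path) is None]


if __name__ == "__main__":
    missing = missing_binaries()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required PostgreSQL binaries were found.")
```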

The psql program is recommended in addition to the above binaries. While Barman does not use it directly, the documentation provides examples of how it can be used to verify that PostgreSQL connections are working as intended. The psql binary can be found in the PostgreSQL client packages.

Third party PostgreSQL variants

If you are using Barman for the backup and recovery of third-party PostgreSQL variants then you will need to check whether the PGDG client/server binaries described above are compatible with your variant. If they are incompatible then you will need to install compatible alternatives from appropriate packages.

Upgrading Barman

Barman follows the trunk-based development paradigm, and as such there is only one stable version, the latest. After every commit, Barman goes through thousands of automated tests for each supported PostgreSQL version and on each supported Linux distribution.

Also, every version is backward compatible with previous ones. Therefore, upgrading Barman normally requires a simple update of packages using yum update or apt update.

There have been, however, the following exceptions in our development history, which required some small changes to the configuration.

Upgrading to Barman 3.0.0

Default backup approach for Rsync backups is now concurrent

Barman will now use concurrent backups if neither concurrent_backup nor exclusive_backup is specified in backup_options. This differs from previous Barman versions where the default was to use exclusive backup.

If you require exclusive backups you will now need to add exclusive_backup to backup_options in the Barman configuration.

Note that exclusive backups are not supported at all when running against PostgreSQL 15.

Metadata changes

A new field named compression will be added to the metadata stored in the backup.info file for all backups taken with version 3.0.0. This is used when recovering from backups taken using the built-in compression functionality of pg_basebackup.

The presence of this field means that earlier versions of Barman are not able to read backups taken with Barman 3.0.0. This means that if you downgrade from Barman 3.0.0 to an earlier version you will have to either manually remove any backups taken with 3.0.0 or edit the backup.info file of each backup to remove the compression field.

The same metadata change affects pg-backup-api so if you are using pg-backup-api you will need to update it to version 0.2.0.

Upgrading from Barman 2.10

If you are using barman-cloud-wal-archive or barman-cloud-backup you need to be aware that from version 2.11 all cloud utilities have been moved into the new barman-cli-cloud package. Therefore, you need to ensure that the barman-cli-cloud package is properly installed as part of the upgrade to the latest version. If you are not using the above tools, you can upgrade to the latest version as usual.

Upgrading from Barman 2.X (prior to 2.8)

Before upgrading from Barman 2.7 or older, users of the rsync backup method on a primary server should explicitly set backup_options to either concurrent_backup (recommended for PostgreSQL 9.6 or higher) or exclusive_backup (current default), otherwise Barman emits a warning every time it runs.

Upgrading from Barman 1.X

If your Barman installation is 1.X, you need to explicitly configure the archiving strategy. Before, the file based archiver, controlled by archiver, was enabled by default.

Before you upgrade your Barman installation to the latest version, make sure you add the following line either globally or for any server that requires it:

Additionally, for a few releases, Barman will transparently set archiver = on with any server that has not explicitly set an archiving strategy and emit a warning.

Configuration

There are three types of configuration files in Barman:

- global/general configuration

- server configuration

- model configuration

The main configuration file (set to /etc/barman.conf by default) contains general options such as main directory, system user, log file, and so on.

Server configuration files, one for each server to be backed up by Barman, are located in the /etc/barman.d directory and must have a .conf suffix.

Similarly, model configuration files are located in the /etc/barman.d directory and must have a .conf suffix.

NOTE: models define a set of configuration overrides which can be applied on top of the configuration of Barman servers that are part of the same cluster as the model, through the barman config-switch command.

IMPORTANT: For historical reasons, you can still have one single configuration file containing both global as well as server and model options. However, for maintenance reasons, this approach is deprecated.

Configuration files in Barman follow the INI format.

Configuration files accept distinct types of parameters:

- string

- enum

- integer

- boolean: on/true/1 are accepted, as well as off/false/0.

None of them needs to be quoted.

NOTE: some enum options allow off but not false.
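The accepted boolean spellings can be illustrated with a small parser. This is a sketch of the rule stated above, not Barman's actual implementation; the function name is hypothetical, and treating values as case-insensitive is an assumption.

```python
TRUE_VALUES = {"on", "true", "1"}
FALSE_VALUES = {"off", "false", "0"}


def parse_boolean(raw: str) -> bool:
    """Convert a configuration value to a bool using the spellings listed above.

    Assumption: surrounding whitespace is ignored and matching is
    case-insensitive.
    """
    value = raw.strip().lower()
    if value in TRUE_VALUES:
        return True
    if value in FALSE_VALUES:
        return False
    raise ValueError(f"not a valid boolean value: {raw!r}")


if __name__ == "__main__":
    for sample in ("on", "false", "1"):
        print(sample, "->", parse_boolean(sample))
```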

Options scope

Every configuration option has a scope:

- global

- server

- model

- global/server: server options that can be generally set at global level

Global options are allowed in the general section, which is identified in the INI file by the [barman] label:

Server options can only be specified in a server section, which is identified by a line in the configuration file, in square brackets ([ and ]). The server section represents the ID of that server in Barman. The following example specifies a section for the server named pg, which belongs to the my-cluster cluster:

Model options can only be specified in a model section, which is identified the same way as a server section. There can be no conflicts among the identifier of server sections and model sections. The following example specifies a section for the model named pg:switchover, which belongs to the my-cluster cluster:

    [pg:switchover]
    cluster=my-cluster
    model=true
    ; Configuration options for the model named 'pg:switchover', which belongs to
    ; the server which is configured with the option 'cluster=my-cluster', go here

There are two reserved words that can be used neither as server names nor as model names in Barman:

- barman: identifier of the global section

- all: a handy shortcut that allows you to execute some commands on every server managed by Barman in sequence

Barman implements the convention over configuration design paradigm, which attempts to reduce the number of options that you are required to configure without losing flexibility. Therefore, some server options can be defined at global level and overridden at server level, allowing users to specify a generic behavior and refine it for one or more servers. These options have a global/server scope.
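The global/server scope can be pictured as a two-level lookup: a value set in the server section wins, otherwise the value from the [barman] section applies. The sketch below illustrates the idea with Python's configparser module. It is not how Barman itself resolves options, and the helper names and sample values are hypothetical.

```python
import configparser

# Sample configuration: 'compression' is set only globally, while
# 'archiver' is set globally and overridden for the server 'pg'.
EXAMPLE = """
[barman]
compression = gzip
archiver = on

[pg]
archiver = off
"""


def load_example():
    """Parse the sample configuration above."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.read_string(EXAMPLE)
    return parser


def effective_option(parser, server, option):
    """Return the server-level value if present, else the global one."""
    if parser.has_option(server, option):
        return parser.get(server, option)
    return parser.get("barman", option)


if __name__ == "__main__":
    config = load_example()
    print("compression:", effective_option(config, "pg", "compression"))  # inherited
    print("archiver:", effective_option(config, "pg", "archiver"))        # overridden
```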

For a list of all the available configurations and their scope, please refer to section 5 of the 'man' page.

Examples of configuration

The following is a basic example of main configuration file:

    [barman]
    barman_user = barman
    configuration_files_directory = /etc/barman.d
    barman_home = /var/lib/barman
    log_file = /var/log/barman/barman.log
    log_level = INFO
    compression = gzip

The example below, on the other hand, is a server configuration file that uses streaming backup:

    [streaming-pg]
    description = "Example of PostgreSQL Database (Streaming-Only)"
    conninfo = host=pg user=barman dbname=postgres
    streaming_conninfo = host=pg user=streaming_barman
    backup_method = postgres
    streaming_archiver = on
    slot_name = barman

The following example defines a configuration model with a set of overrides that can be applied to the server whose cluster is streaming-pg:

    [streaming-pg:switchover]
    cluster=streaming-pg
    model=true
    conninfo = host=pg-2 user=barman dbname=postgres
    streaming_conninfo = host=pg-2 user=streaming_barman

The following code shows a basic example of traditional backup using rsync/SSH:

    [ssh-pg]
    description = "Example of PostgreSQL Database (via Ssh)"
    ssh_command = ssh postgres@pg
    conninfo = host=pg user=barman dbname=postgres
    backup_method = rsync
    parallel_jobs = 1
    reuse_backup = link
    archiver = on

For more detailed information, please refer to the distributed barman.conf file, as well as the ssh-server.conf-template and streaming-server.conf-template template files.
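To visualise what a model does, the sketch below layers the streaming-pg:switchover overrides on top of the streaming-pg server options from the examples above. In Barman the switch is performed with the barman config-switch command; this code only illustrates the layering. The helper names are hypothetical, and the sketch assumes that a server without an explicit cluster option belongs to a cluster named after the server.

```python
import configparser

EXAMPLE = """
[streaming-pg]
conninfo = host=pg user=barman dbname=postgres
streaming_conninfo = host=pg user=streaming_barman
backup_method = postgres
streaming_archiver = on
slot_name = barman

[streaming-pg:switchover]
cluster = streaming-pg
model = true
conninfo = host=pg-2 user=barman dbname=postgres
streaming_conninfo = host=pg-2 user=streaming_barman
"""


def load_example():
    """Parse the sample server and model sections above."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.read_string(EXAMPLE)
    return parser


def apply_model(parser, server, model):
    """Return the server options with the model's overrides layered on top."""
    # Assumption: without an explicit 'cluster' option, the cluster name
    # is taken to be the server name.
    server_cluster = parser.get(server, "cluster", fallback=server)
    if parser.get(model, "cluster") != server_cluster:
        raise ValueError("model and server belong to different clusters")
    merged = dict(parser[server])
    for key, value in parser[model].items():
        if key not in ("cluster", "model"):  # bookkeeping keys, not overrides
            merged[key] = value
    return merged


if __name__ == "__main__":
    effective = apply_model(load_example(), "streaming-pg", "streaming-pg:switchover")
    for key, value in effective.items():
        print(f"{key} = {value}")
```

Only the two connection strings change; options such as backup_method and slot_name keep the values defined for the server.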

Setup of a new server in Barman

As mentioned in the "Design and architecture" section, we will use the following conventions:

- pg as server ID and host name where PostgreSQL is installed

- backup as host name where Barman is located

- barman as the user running Barman on the backup server (identified by the parameter barman_user in the configuration)

- postgres as the user running PostgreSQL on the pg server

IMPORTANT: A server in Barman must refer to the same PostgreSQL instance for the whole backup and recoverability history (i.e. the same system identifier). This means that if you perform an upgrade of the instance (using for example pg_upgrade), you must not reuse the same server definition in Barman. Use a new one instead, as the two instances have nothing in common.

Preliminary steps

This section contains some preliminary steps that you need to undertake before setting up your PostgreSQL server in Barman.

IMPORTANT: Before you proceed, it is important that you have made your decision in terms of WAL archiving and backup strategies, as outlined in the "Design and architecture" section. In particular, you should decide which WAL archiving methods to use, as well as the backup method.

PostgreSQL connection

You need to make sure that the backup server can connect to the PostgreSQL server on pg either as a superuser or as a user that has been granted the correct set of privileges.

You can create a specific superuser in PostgreSQL, named barman, as follows:

Or create a normal user with the required set of privileges as follows:

GRANT EXECUTE ON FUNCTION pg_backup_start(text, boolean) to barman;

GRANT EXECUTE ON FUNCTION pg_backup_stop(boolean) to barman;

GRANT EXECUTE ON FUNCTION pg_switch_wal() to barman;

GRANT EXECUTE ON FUNCTION pg_create_restore_point(text) to barman;

GRANT pg_read_all_settings TO barman;

GRANT pg_read_all_stats TO barman;

In PostgreSQL version 14 and earlier, the functions pg_backup_start and pg_backup_stop had different names and different signatures. If you are running one of those versions, you will therefore need to replace the first two lines in the above block with:

GRANT EXECUTE ON FUNCTION pg_start_backup(text, boolean, boolean) to barman;

GRANT EXECUTE ON FUNCTION pg_stop_backup() to barman;

GRANT EXECUTE ON FUNCTION pg_stop_backup(boolean, boolean) to barman;

It is worth noting that with PostgreSQL version 13 and below without a real superuser, the --force option of the barman switch-wal command will not work.

If you are running PostgreSQL version 15 or above, you can grant the pg_checkpoint role, so you can use this feature without a superuser:

IMPORTANT: The above createuser command will prompt for a password, which you are then advised to add to the ~barman/.pgpass file on the backup server. For further information, please refer to "The Password File" section in the PostgreSQL Documentation.
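As a minimal sketch of the advice above, the entry can be appended to the password file from the shell. The `hostname:port:database:username:password` line format and the requirement that the file grant no access to group or others come from the PostgreSQL documentation. The `PGPASS_FILE` variable, the port `5432` and the password `CHANGE_ME` are placeholders chosen for illustration only.

```shell
# Path of the password file. It defaults to a scratch file so the sketch can
# be tried safely; on the backup server it would be ~barman/.pgpass.
PGPASS_FILE="${PGPASS_FILE:-$(mktemp)}"

# One entry per line: hostname:port:database:username:password
# "CHANGE_ME" is a placeholder, not a real password.
printf '%s\n' 'pg:5432:postgres:barman:CHANGE_ME' >> "$PGPASS_FILE"

# libpq ignores the file unless group and others have no access to it.
chmod 600 "$PGPASS_FILE"
```

Running it as the barman user with `PGPASS_FILE=~/.pgpass` would add the entry to the real file.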

This connection is required by Barman in order to coordinate its activities with the server, as well as for monitoring purposes.

You can choose your favourite client authentication method among those offered by PostgreSQL. More information can be found in the "Client Authentication" section of the PostgreSQL Documentation.

Run the following command as the barman user on the backup host in order to verify that the backup host can connect to PostgreSQL on the pg host:

Write down the above information (user name, host name and database name) and keep it for later. You will need it in the conninfo option for your server configuration, as in this example:

NOTE: application_name is optional.

PostgreSQL WAL archiving and replication

Before you proceed, you need to properly configure PostgreSQL on pg to accept streaming replication connections from the Barman server. Please read the following sections in the PostgreSQL documentation:

One configuration parameter that is crucially important is the wal_level parameter. This parameter must be configured to ensure that all the information necessary for a backup to be coherent is included in the transaction log file.

Restart the PostgreSQL server for the configuration to be refreshed.

PostgreSQL streaming connection

If you plan to use WAL streaming or streaming backup, you need to set up a streaming connection. We recommend creating a specific user in PostgreSQL, named streaming_barman, as follows:

IMPORTANT: The above command will prompt for a password, which you are then advised to add to the ~barman/.pgpass file on the backup server. For further information, please refer to "The Password File" section in the PostgreSQL Documentation.

You can manually verify that the streaming connection works through the following command:

If the connection is working you should see a response containing the system identifier, current timeline ID and current WAL flush location, for example:

systemid | timeline | xlogpos | dbname

---------------------+----------+------------+--------

7139870358166741016 | 1 | 1/330000D8 |

(1 row)

IMPORTANT: Please make sure you are able to connect via streaming replication before going any further.

You also need to configure the max_wal_senders parameter in the PostgreSQL configuration file. The number of WAL senders depends on the PostgreSQL architecture you have implemented. In this example, we are setting it to 2:

This option represents the maximum number of concurrent streaming connections that the server will be allowed to manage.

Another important parameter is max_replication_slots, which represents the maximum number of replication slots that the server will be allowed to manage. This parameter is needed if you are planning to receive WAL files over the streaming connection:

The values proposed for max_replication_slots and max_wal_senders must be considered as examples, and the values you will use in your actual setup must be chosen after a careful evaluation of the architecture. Please consult the PostgreSQL documentation for guidelines and clarifications.
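For illustration, the three settings discussed in this section could be appended to postgresql.conf as sketched below. The value `2` for max_wal_senders mirrors the example above, `2` for max_replication_slots is an assumption made for symmetry, and `'replica'` is the wal_level used elsewhere in this manual. `PG_CONF` is a hypothetical variable that defaults to a scratch file.

```shell
# postgresql.conf to edit. It defaults to a scratch file for safe
# experimentation; point it at the real file on the pg host when ready.
PG_CONF="${PG_CONF:-$(mktemp)}"

cat >> "$PG_CONF" <<'EOF'
# Example values only: size them for your own architecture.
wal_level = 'replica'
max_wal_senders = 2
max_replication_slots = 2
EOF

# Show the settings that were just written.
grep -E '^(wal_level|max_wal_senders|max_replication_slots) = ' "$PG_CONF"
```

All three parameters are read at server start, so PostgreSQL must be restarted for them to take effect.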

SSH connections

SSH is a protocol and a set of tools that allows you to open a remote shell to a remote server and copy files between the server and the local system. You can find more documentation about SSH usage in the article "SSH Essentials" by Digital Ocean.

SSH key exchange is a very common practice that is used to implement secure passwordless connections between users on different machines, and it's needed to use rsync for WAL archiving and for backups.

NOTE: This procedure is not needed if you plan to use the streaming connection only to archive transaction logs and backup your PostgreSQL server.

SSH configuration of postgres user

Unless you have done it before, you need to create an SSH key for the PostgreSQL user. Log in as postgres, in the pg host and type:

As this key must be used to connect from hosts without providing a password, no passphrase should be entered during the key pair creation.

SSH configuration of barman user

As in the previous paragraph, you need to create an SSH key for the Barman user. Log in as barman in the backup host and type:

For the same reason, no passphrase should be entered.

From PostgreSQL to Barman

The SSH connection from the PostgreSQL server to the backup server is needed to correctly archive WAL files using the archive_command setting.

To successfully connect from the PostgreSQL server to the backup server, the PostgreSQL public key has to be configured into the authorized keys of the backup server for the barman user.

The public key to be authorized is stored inside the postgres user home directory in a file named .ssh/id_rsa.pub, and its content should be included in a file named .ssh/authorized_keys inside the home directory of the barman user in the backup server. If the authorized_keys file doesn't exist, create it using 600 as permissions.
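The step above can be sketched as a small shell function. `authorize_key` is a hypothetical helper, not part of Barman or OpenSSH. It assumes the public key file has already been copied to the host where it is run, and it sets the `.ssh` directory to mode 700, which is the conventional choice rather than something this manual prescribes.

```shell
# authorize_key PUBKEY_FILE SSH_DIR
# Appends the public key to SSH_DIR/authorized_keys unless it is already
# listed, creating the file with 600 permissions when it does not exist.
authorize_key() {
    pubkey_file="$1"
    ssh_dir="$2"
    # 700 on the directory is the conventional setting for ~/.ssh.
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
    touch "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"
    # -x: whole-line match, -F: fixed string, -f: read the pattern from file.
    if ! grep -qxF -f "$pubkey_file" "$ssh_dir/authorized_keys"; then
        cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    fi
}
```

For example, after copying the postgres user's id_rsa.pub to a temporary path on the backup server, the barman user could run `authorize_key /tmp/postgres_id_rsa.pub ~/.ssh` (the temporary path is an assumption). Calling the function twice with the same key leaves a single entry.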

The following command should succeed without any output if the SSH key pair exchange has been completed successfully:

The value of the archive_command configuration parameter will be discussed in the "WAL archiving via archive_command" section.

From Barman to PostgreSQL

The SSH connection between the backup server and the PostgreSQL server is used for the traditional backup over rsync. Just as with the connection from the PostgreSQL server to the backup server, we should authorize the public key of the backup server in the PostgreSQL server for the postgres user.

The content of the file .ssh/id_rsa.pub in the barman server should be put in the file named .ssh/authorized_keys in the PostgreSQL server. The permissions of that file should be 600.

The following command should succeed without any output if the key pair exchange has been completed successfully.

The server configuration file

Create a new file, called pg.conf, in /etc/barman.d directory, with the following content:

[pg]

description = "Our main PostgreSQL server"

conninfo = host=pg user=barman dbname=postgres

backup_method = postgres

# backup_method = rsync

The conninfo option is set according to the section "Preliminary steps: PostgreSQL connection".

The meaning of the backup_method option will be covered in the backup section of this guide.

If you plan to use the streaming connection for WAL archiving or to create a backup of your server, you also need a streaming_conninfo parameter in your server configuration file:

This value must be chosen as described in the section "Preliminary steps: PostgreSQL streaming connection".

WAL streaming

Barman can reduce the Recovery Point Objective (RPO) by allowing users to add continuous WAL streaming from a PostgreSQL server, on top of the standard archive_command strategy.

Barman relies on pg_receivewal, which exploits the native streaming replication protocol and continuously receives transaction logs from a PostgreSQL server (master or standby). Prior to PostgreSQL 10, pg_receivewal was named pg_receivexlog.

IMPORTANT: Barman requires that pg_receivewal is installed on the same server. It is recommended to install the latest available version of pg_receivewal, as it is backward compatible. Otherwise, users can install multiple versions of pg_receivewal on the Barman server and properly point to the specific version for a server, using the path_prefix option in the configuration file.

In order to enable streaming of transaction logs, you need to:

- set up a streaming connection as previously described

- set the streaming_archiver option to on

The cron command, if the aforementioned requirements are met, transparently manages log streaming through the execution of the receive-wal command. This is the recommended scenario.

However, users can manually execute the receive-wal command:

NOTE: The receive-wal command is a foreground process.

Transaction logs are streamed directly in the directory specified by the streaming_wals_directory configuration option and are then archived by the archive-wal command.

Unless otherwise specified in the streaming_archiver_name parameter, Barman will set application_name of the WAL streamer process to barman_receive_wal, allowing you to monitor its status in the pg_stat_replication system view of the PostgreSQL server.

Replication slots

Replication slots are an automated way to ensure that the PostgreSQL server will not remove WAL files until they have been received by all archivers. Barman uses this mechanism to receive the transaction logs from PostgreSQL.

You can find more information about replication slots in the PostgreSQL manual.

You can even base your backup architecture on streaming connection only. This scenario is useful to configure Docker-based PostgreSQL servers and even to work with PostgreSQL servers running on Windows.

IMPORTANT: At this moment, the Windows support is still experimental, as it is not yet part of our continuous integration system.

How to configure the WAL streaming

First, the PostgreSQL server must be configured to stream the transaction log files to the Barman server.

To configure the streaming connection from Barman to the PostgreSQL server you need to enable the streaming_archiver, as already said, including this line in the server configuration file:

If you plan to use replication slots (recommended), another essential option for the setup of the streaming-based transaction log archiving is the slot_name option:

This option defines the name of the replication slot that will be used by Barman. It is mandatory if you want to use replication slots.

When you configure the replication slot name, you can manually create a replication slot for Barman with this command:

barman@backup$ barman receive-wal --create-slot pg

Creating physical replication slot 'barman' on server 'pg'

Replication slot 'barman' created

Starting with Barman 2.10, you can configure Barman to automatically create the replication slot by setting:
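A sketch of the streaming-related lines in the server configuration file follows, assuming the slot is named barman as in the output above. `create_slot = auto` is the setting that enables automatic slot creation. The `BARMAN_SERVER_CONF` variable is hypothetical and defaults to a scratch file. On a real installation it would point at the server file (for example /etc/barman.d/pg.conf), which must already contain the `[pg]` section header.

```shell
# Server configuration file to extend. It defaults to a scratch file.
BARMAN_SERVER_CONF="${BARMAN_SERVER_CONF:-$(mktemp)}"

cat >> "$BARMAN_SERVER_CONF" <<'EOF'
streaming_archiver = on
slot_name = barman
create_slot = auto
EOF

# Review the result before running "barman check" against the server.
cat "$BARMAN_SERVER_CONF"
```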

Streaming WALs and backups from different hosts (Barman 3.10.0 and later)

Barman uses the connection info defined in streaming_conninfo when creating pg_receivewal processes to stream WAL segments and uses conninfo when checking the status of replication slots. Because conninfo and streaming_conninfo are also used when taking backups, this default configuration forces Barman to stream WALs and take backups from the same host.

If an alternative configuration is required, such as backups being sourced from a standby with WALs being streamed from the primary, then this can be achieved using the following options:

- wal_streaming_conninfo: A connection string which Barman will use instead of streaming_conninfo when receiving WAL segments via the streaming replication protocol and when checking the status of the replication slot used for receiving WALs.

- wal_conninfo: An optional connection string specifically for monitoring WAL streaming status and performing related checks. If set, Barman will use this instead of wal_streaming_conninfo when checking the status of the replication slot.

The following restrictions apply and are enforced by Barman during checks:

- Connections defined by wal_streaming_conninfo and wal_conninfo must reach a PostgreSQL instance which belongs to the same cluster reached by the streaming_conninfo and conninfo connections.

- The wal_streaming_conninfo connection string must be able to create streaming replication connections.

- Either wal_streaming_conninfo or wal_conninfo (if it is set) must have sufficient permissions to read settings and check replication slot status. The required permissions are one of:

  - The pg_monitor role.

  - Both the pg_read_all_settings and pg_read_all_stats roles.

  - The superuser role.

IMPORTANT: While it is possible to stream WALs from any PostgreSQL instance in a cluster there is a risk that WAL segments can be lost when streaming WALs from a standby, if such a standby is unable to keep up with its own upstream source. For this reason it is strongly recommended that WALs are always streamed directly from the primary.

Limitations of partial WAL files with recovery

The standard behaviour of pg_receivewal is to write transactional information in a file with .partial suffix after the WAL segment name.

Barman expects a partial file to be in the streaming_wals_directory of a server. When completed, pg_receivewal removes the .partial suffix and opens the following one, delivering the file to the archive-wal command of Barman for permanent storage and compression.

In case of a sudden and unrecoverable failure of the master PostgreSQL server, the .partial file that has been streamed to Barman contains very important information that the standard archiver (through PostgreSQL's archive_command) has not been able to deliver to Barman.

As of Barman 2.10, the get-wal command is able to return the content of the current .partial WAL file through the --partial/-P option. This is particularly useful in the case of recovery, both full or to a point in time. Therefore, in case you run a recover command with get-wal enabled, and without --standby-mode, Barman will automatically add the -P option to barman-wal-restore (which will then relay that to the remote get-wal command) in the restore_command recovery option.

get-wal will also search in the incoming directory, in case a WAL file has already been shipped to Barman, but not yet archived.

WAL archiving via archive_command

The archive_command is the traditional method to archive WAL files.

The value of this PostgreSQL configuration parameter must be a shell command to be executed by the PostgreSQL server to copy the WAL files to the Barman incoming directory.

This can be done in two ways, both requiring an SSH connection:

- via the barman-wal-archive utility (from Barman 2.6)

- via rsync/SSH (common approach before Barman 2.6)

See sections below for more details.

IMPORTANT: Read the "Concurrent Backup and backup from a standby" section for more detailed information on how Barman supports this feature.

WAL archiving via barman-wal-archive

From Barman 2.6, the recommended way to safely and reliably archive WAL files to Barman via archive_command is to use the barman-wal-archive command contained in the barman-cli package, distributed via EnterpriseDB public repositories and available under GNU GPL 3 licence. barman-cli must be installed on each PostgreSQL server that is part of the Barman cluster.

Using barman-wal-archive instead of rsync/SSH reduces the risk of data corruption of the shipped WAL file on the Barman server. When using rsync/SSH as archive_command, there is no mechanism that guarantees that the content of the WAL file is flushed and fsync-ed to disk on the destination.

For this reason, we have developed the barman-wal-archive utility, which natively communicates with Barman's put-wal command (introduced in 2.6). The put-wal command is responsible for receiving the file, fsync-ing its content and placing it in the proper incoming directory for that server. Therefore, barman-wal-archive also reduces the risk of copying a WAL file to the wrong location/directory in Barman, as the only parameter to be used in the archive_command is the server's ID.

For more information on the barman-wal-archive command, type man barman-wal-archive on the PostgreSQL server.

You can check that barman-wal-archive can connect to the Barman server, and that the required PostgreSQL server is configured in Barman to accept incoming WAL files with the following command:

Where backup is the host where Barman is installed, pg is the name of the PostgreSQL server as configured in Barman and DUMMY is a placeholder (barman-wal-archive requires an argument for the WAL file name, which is ignored).

If everything is configured correctly you should see the following output:

Since it uses SSH to communicate with the Barman server, SSH key authentication is required for the postgres user to login as barman on the backup server. If a port other than the SSH default of 22 should be used then the --port option can be added to specify the port that should be used for the SSH connection.

Edit the postgresql.conf file of the PostgreSQL instance on the pg database, activate the archive mode and set archive_command to use barman-wal-archive:

Then restart the PostgreSQL server.
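The postgresql.conf change described above might look like the following sketch. It uses the host name backup and the server ID pg from the conventions of this manual, and `wal_level = 'replica'` as in the rsync example of the next section. `BARMAN_HOST`, `SERVER_ID` and `PG_CONF` are hypothetical shell variables, and `PG_CONF` defaults to a scratch file.

```shell
BARMAN_HOST="backup"   # host where Barman is installed
SERVER_ID="pg"         # server name as configured in Barman
PG_CONF="${PG_CONF:-$(mktemp)}"

# %p is expanded by PostgreSQL to the path of the WAL file to archive.
cat >> "$PG_CONF" <<EOF
archive_mode = on
wal_level = 'replica'
archive_command = 'barman-wal-archive ${BARMAN_HOST} ${SERVER_ID} %p'
EOF

grep '^archive_command' "$PG_CONF"
```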

WAL archiving via rsync/SSH

You can retrieve the incoming WALs directory using the show-servers Barman command and looking for the incoming_wals_directory value:

barman@backup$ barman show-servers pg |grep incoming_wals_directory

incoming_wals_directory: /var/lib/barman/pg/incoming

Edit the postgresql.conf file of the PostgreSQL instance on the pg database and activate the archive mode:

archive_mode = on

wal_level = 'replica'

archive_command = 'rsync -a %p barman@backup:INCOMING_WALS_DIRECTORY/%f'

Make sure you change the INCOMING_WALS_DIRECTORY placeholder with the value returned by the barman show-servers pg command above.
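The placeholder substitution can be sketched in shell as follows. `incoming_dir` and `rsync_archive_command` are hypothetical helper names, and the sample input line is copied from the output shown above so that the sketch runs without a Barman installation.

```shell
# Read "barman show-servers SERVER" output on stdin and print the value of
# incoming_wals_directory.
incoming_dir() {
    sed -n 's/^[[:space:]]*incoming_wals_directory:[[:space:]]*//p'
}

# Print the archive_command line for the incoming directory given as $1.
rsync_archive_command() {
    printf "archive_command = 'rsync -a %%p barman@backup:%s/%%f'\n" "$1"
}

# On the backup host you would pipe "barman show-servers pg" into
# incoming_dir; here a sample line stands in for the real output.
dir="$(printf '%s\n' 'incoming_wals_directory: /var/lib/barman/pg/incoming' | incoming_dir)"
rsync_archive_command "$dir"
```

The printed line can then be pasted into postgresql.conf on the pg host.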

Restart the PostgreSQL server.

In some cases, you might want to add stricter checks to the archive_command process. For example, some users have suggested the following one:

archive_command = 'test $(/bin/hostname --fqdn) = HOSTNAME \

&& rsync -a %p barman@backup:INCOMING_WALS_DIRECTORY/%f'

Where the HOSTNAME placeholder should be replaced with the value returned by hostname --fqdn. This trick is a safeguard in case the server is cloned and avoids receiving WAL files from recovered PostgreSQL instances.

Verification of WAL archiving configuration

In order to test that continuous archiving is on and properly working, you need to check both the PostgreSQL server and the backup server. In particular, you need to check that WAL files are correctly collected in the destination directory.

For this purpose and to facilitate the verification of the WAL archiving process, the switch-wal command has been developed:

The above command will force PostgreSQL to switch WAL file and trigger the archiving process in Barman. Barman will wait for one file to arrive within 30 seconds (you can change the timeout through the --archive-timeout option). If no WAL file is received, an error is returned.

You can verify if the WAL archiving has been correctly configured using the barman check command.

Streaming backup

Barman can back up a PostgreSQL server using the streaming connection, relying on pg_basebackup. Since version 3.11, Barman also supports block-level incremental backups using the streaming connection. For more information, consult the "Features in detail" section.

IMPORTANT: Barman requires that pg_basebackup is installed on the same server. It is recommended to install the latest available version of pg_basebackup, as it is backward compatible. You can even install multiple versions of pg_basebackup on the Barman server and properly point to the specific version for a server, using the path_prefix option in the configuration file.

To successfully backup your server with the streaming connection, you need to use postgres as your backup method:

IMPORTANT: You will not be able to start a backup if WAL is not being correctly archived to Barman, either through the archiver or the streaming_archiver.

To check if the server configuration is valid you can use the barman check command:

To start a backup you can use the barman backup command:

Backup with rsync/SSH

The backup over rsync was the only method for backups in Barman before version 2.0, and before 3.11 it was the only method that supported incremental backups. Current Barman supports file-level as well as block-level incremental backups. Backups using rsync implement the file-level incremental backup feature. Please consult the "Features in detail" section for more information.

To take a backup using rsync you need to put these parameters inside the Barman server configuration file:

The backup_method option activates the rsync backup method, and the ssh_command option is needed to correctly create an SSH connection from the Barman server to the PostgreSQL server.

IMPORTANT: You will not be able to start a backup if WAL is not being correctly archived to Barman, either through the archiver or the streaming_archiver.

To check if the server configuration is valid you can use the barman check command:

To take a backup use the barman backup command:

NOTE: Starting with Barman 3.11.0, Barman uses a keep-alive mechanism when taking rsync-based backups. It keeps sending a simple SELECT 1 query over the libpq connection where Barman runs the pg_backup_start/pg_backup_stop low-level API functions. This reduces the probability of a firewall or a router dropping that connection, which can be idle for a long time while the base backup is being copied. You can control the interval of the heartbeats, or even disable the mechanism, through the keepalive_interval configuration option.

Backup with cloud snapshots

Barman is able to create backups of PostgreSQL servers deployed within certain cloud environments by taking snapshots of storage volumes. When configured in this manner the physical backups of PostgreSQL files are volume snapshots stored in the cloud while Barman acts as a storage server for WALs and the backup catalog. These backups can then be managed by Barman just like traditional backups taken with the rsync or postgres backup methods even though the backup data itself is stored in the cloud.

It is also possible to create snapshot backups without a Barman server using the barman-cloud-backup command directly on a suitable PostgreSQL server.

Prerequisites for cloud snapshots

In order to use the snapshot backup method with Barman, deployments must meet the following prerequisites:

- PostgreSQL must be deployed on a compute instance within a supported cloud provider.

- PostgreSQL must be configured such that all critical data, such as PGDATA and any tablespace data, is stored on storage volumes which support snapshots.

- The findmnt command must be available on the PostgreSQL host.

IMPORTANT: Any configuration files stored outside of PGDATA will not be included in the snapshots. The management of such files must be carried out using another mechanism such as a configuration management system.

Google Cloud Platform snapshot prerequisites

The google-cloud-compute and grpcio libraries must be available to the Python distribution used by Barman. These libraries are an optional dependency and are not installed as standard by any of the Barman packages. They can be installed as follows using pip:

NOTE: The minimum version of Python required by the google-cloud-compute library is 3.7. GCP snapshots cannot be used with earlier versions of Python.

The following additional prerequisites apply to snapshot backups on Google Cloud Platform:

- All disks included in the snapshot backup must be zonal persistent disks. Regional persistent disks are not currently supported.

- A service account with the required set of permissions must be available to Barman. This can be achieved by attaching such an account to the compute instance running Barman (recommended) or by using the GOOGLE_APPLICATION_CREDENTIALS environment variable to point to a credentials file.

The required permissions are:

- compute.disks.createSnapshot

- compute.disks.get

- compute.globalOperations.get

- compute.instances.get

- compute.snapshots.create

- compute.snapshots.delete

- compute.snapshots.list

Azure snapshot prerequisites

The azure-mgmt-compute and azure-identity libraries must be available to the Python distribution used by Barman.

These libraries are an optional dependency and are not installed as standard by any of the Barman packages. They can be installed as follows using pip:

NOTE: The minimum version of Python required by the azure-mgmt-compute library is 3.7. Azure snapshots cannot be used with earlier versions of Python.

The following additional prerequisites apply to snapshot backups on Azure:

- All disks included in the snapshot backup must be managed disks which are attached to the VM instance as data disks.

- Barman must be able to use a credential obtained either using managed identity or CLI login and this must grant access to Azure with the required set of permissions.

The following permissions are required:

- Microsoft.Compute/disks/read

- Microsoft.Compute/virtualMachines/read

- Microsoft.Compute/snapshots/read

- Microsoft.Compute/snapshots/write

- Microsoft.Compute/snapshots/delete

AWS snapshot prerequisites

The boto3 library must be available to the Python distribution used by Barman.

This library is an optional dependency and not installed as standard by any of the Barman packages. It can be installed as follows using pip:

The following additional prerequisites apply to snapshot backups on AWS:

- All disks included in the snapshot backup must be non-root EBS volumes and must be attached to the same VM instance.

- NVMe volumes are not currently supported.

The following permissions are required:

- ec2:CreateSnapshot

- ec2:CreateTags

- ec2:DeleteSnapshot

- ec2:DescribeSnapshots

- ec2:DescribeInstances

- ec2:DescribeVolumes

Configuration for snapshot backups

To configure Barman for backup via cloud snapshots, set the backup_method parameter to snapshot and set snapshot_provider to a supported cloud provider:

Currently Google Cloud Platform (gcp), Microsoft Azure (azure) and AWS (aws) are supported.

The following parameters must be set regardless of cloud provider:

Where snapshot_instance is set to the name of the VM or compute instance where the storage volumes are attached and snapshot_disks is a comma-separated list of the disks which should be included in the backup.

IMPORTANT: You must ensure that snapshot_disks includes every disk which stores data required by PostgreSQL. Any data which is not stored on a storage volume listed in snapshot_disks will not be included in the backup and therefore will not be available at recovery time.

Configuration for Google Cloud Platform snapshots

The following additional parameters must be set when using GCP:

gcp_project should be set to the ID of the GCP project which owns the instance and storage volumes defined by snapshot_instance and snapshot_disks. gcp_zone should be set to the availability zone in which the instance is located.
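Putting the general and GCP-specific parameters together, a server configuration fragment for snapshot backups could be sketched as below. Only the parameter names come from this section. Every value on the right-hand side (instance, disk, project and zone names) is a made-up placeholder, and `BARMAN_SERVER_CONF` is a hypothetical variable that defaults to a scratch file.

```shell
# Server configuration file to extend. It defaults to a scratch file.
BARMAN_SERVER_CONF="${BARMAN_SERVER_CONF:-$(mktemp)}"

cat >> "$BARMAN_SERVER_CONF" <<'EOF'
backup_method = snapshot
snapshot_provider = gcp
; Placeholder names below: replace them with your own resources.
snapshot_instance = INSTANCE_NAME
snapshot_disks = DISK_NAME,DISK2_NAME
gcp_project = GCP_PROJECT_ID
gcp_zone = GCP_ZONE
EOF

# List the snapshot-related keys that were written.
grep -E '^(backup_method|snapshot_|gcp_)' "$BARMAN_SERVER_CONF"
```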

Configuration for Azure snapshots

The following additional parameters must be set when using Azure:

azure_subscription_id should be set to the ID of the Azure subscription which owns the instance and storage volumes defined by snapshot_instance and snapshot_disks. azure_resource_group should be set to the resource group to which the instance and disks belong.

Configuration for AWS snapshots

When specifying snapshot_instance or snapshot_disks, Barman will accept either the instance/volume ID which was assigned to the resource by AWS or a name. If a name is used then Barman will query AWS to find resources with a matching Name tag. If zero or multiple matching resources are found then Barman will exit with an error.

The following optional parameters can be set when using AWS:

aws_region = AWS_REGION

aws_profile = AWS_PROFILE_NAME

aws_await_snapshots_timeout = TIMEOUT_IN_SECONDS

If aws_profile is used it should be set to the name of a section in the AWS credentials file. If aws_profile is not used then the default profile will be used. If no credentials file exists then credentials will be sourced from the environment.

If aws_region is specified it will override any region that may be defined in the AWS profile.

If aws_await_snapshots_timeout is not set, the default of 3600 seconds will be used.

Taking a snapshot backup

Once the configuration options are set and appropriate credentials are available to Barman, backups can be taken using the barman backup command.

Barman will validate the configuration parameters for snapshot backups during the barman check command and also when starting a backup.

Note that the following arguments / config variables are unavailable when using backup_method = snapshot:

| Command argument | Config variable |
|---|---|
| N/A | backup_compression |
| --bwlimit | bandwidth_limit |
| --jobs | parallel_jobs |
| N/A | network_compression |
| --reuse-backup | reuse_backup |

For a more in-depth discussion of snapshot backups, including considerations around management and recovery of snapshot backups, see the cloud snapshots section in feature details.

How to setup a Windows based server

You can backup a PostgreSQL server running on Windows using the streaming connection for both WAL archiving and for backups.

IMPORTANT: This feature is still experimental because it is not yet part of our continuous integration system.

Follow every step discussed previously for a streaming connection setup.

WARNING: At this moment, pg_basebackup interoperability from Windows to Linux is still experimental. If you are having issues taking a backup from a Windows server and your PostgreSQL locale is not in English, a possible workaround for the issue is instructing your PostgreSQL to emit messages in English. You can do this by putting the following parameter in your postgresql.conf file:

This has been reported to fix the issue.

You can backup your server as usual.

Remote recovery is not supported for Windows servers, so you must recover your cluster locally in the Barman server and then copy all the files on a Windows server or use a folder shared between the PostgreSQL server and the Barman server.

Additionally, make sure that the system user chosen to run PostgreSQL has the permission needed to access the restored data. Basically, it must have full control over the PostgreSQL data directory.

General commands

Barman has many commands and, for the sake of exposition, we can organize them by scope.

The scope of the general commands is the entire Barman server, which can back up many PostgreSQL servers. Server commands, instead, act only on a specified server. Backup commands work on a backup, which is taken from a certain server.

The following list includes the general commands.

cron

barman doesn't include a long-running daemon or service file (there's nothing to systemctl start, service start, etc.). Instead, the barman cron subcommand is provided to perform barman's background "steady-state" backup operations.

You can perform maintenance operations, on both WAL files and backups, by running barman cron.

NOTE: This command should be executed in a cron script. Our recommendation is to schedule barman cron to run every minute. If you installed Barman using the rpm or debian package, a cron entry running every minute will be created for you.

barman cron executes WAL archiving operations concurrently on a server basis, and also enforces retention policies on those servers that have:

- retention_policy not empty and valid;
- retention_policy_mode set to auto.

The cron command ensures that WAL streaming is started for those servers that have requested it, by transparently executing the receive-wal command.

In order to stop the operations started by the cron command, comment out the cron entry and run barman receive-wal --stop <server_name> for each server that streams WAL files.

You might want to check barman list-servers to make sure you get all of your servers.
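The stop procedure can be sketched as a small shell loop. This is a minimal sketch, not an official script: it assumes that barman list-servers --minimal prints one server name per line and that the cron entry has already been commented out. The function name stop_all_receive_wal is hypothetical.

```shell
# Stop the receive-wal process of every configured server.
# Assumption: `barman list-servers --minimal` prints one server name per line.
stop_all_receive_wal() {
    barman list-servers --minimal | while read -r server; do
        # Skip empty lines, if any.
        [ -n "$server" ] || continue
        # Redirect stdin so the command cannot consume the server list.
        barman receive-wal --stop "$server" < /dev/null
    done
}
```

Run stop_all_receive_wal as the barman user. Servers that have no running receive-wal process may report an error, which can be ignored in this context.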

NOTE: barman cron runs background maintenance tasks only and is not responsible for running scheduled backups. Any regularly scheduled backup jobs you require must be scheduled separately, for example in another cron entry which runs barman backup all.
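As an illustration of such a separate entry, the following sketch writes a cron.d-style file that runs barman backup all once a day. The schedule (02:00), the file location, and the assumption that a barman system user exists with barman on its PATH are all illustrative and should be adapted to your environment.

```shell
# Write a hypothetical cron.d-style file that schedules a nightly backup.
# Assumptions: the "barman" system user exists and barman is on its PATH;
# the schedule (02:00 every day) and the file name are illustrative only.
cron_file="${TMPDIR:-/tmp}/barman-backup.cron.example"
cat > "$cron_file" <<'EOF'
# m h dom mon dow user   command
0 2 * * *         barman barman backup all
EOF
# Review the generated file before installing it as root,
# for example under /etc/cron.d/.
cat "$cron_file"
```

The file is written to a temporary location first so that it can be reviewed before anything is installed system-wide.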

diagnose

The diagnose command creates a JSON report useful for diagnostic and support purposes. This report contains information for all configured servers.

NOTE: From Barman 3.10.0 onwards you can optionally specify the --show-config-source argument to the command. In that case, for each configuration option of Barman and of the Barman servers, the output will include not only the configuration value, but also the configuration file which provides the effective value.

IMPORTANT: Even though the diagnose output is written in JSON, a format meant to be machine readable, its structure is not to be considered part of the interface. The format can change between different Barman versions.

list-servers

You can display the list of active servers that have been configured for your backup system with barman list-servers.

A machine readable output can be obtained by adding the --minimal option.

Server commands

As we said in the previous section, server commands work directly on a PostgreSQL server or on its area in Barman, and are useful to check its status, perform maintenance operations, take backups, and manage the WAL archive.

archive-wal

The archive-wal command executes maintenance operations on WAL files for a given server. These operations include processing of the WAL files received from the streaming connection, from the archive_command, or from both.

IMPORTANT: The archive-wal command, even if it can be directly invoked, is designed to be started from the cron general command.

backup

The backup command takes a full backup (base backup) of the given servers. It has several options that let you override the corresponding configuration parameter for the new backup. For more information, consult the manual page.

You can perform a full backup for a given server with barman backup <server_name>.

TIP: You can use barman backup all to sequentially back up all your configured servers.

TIP: You can use barman backup <server_1_name> <server_2_name> to sequentially back up both the <server_1_name> and <server_2_name> servers.

For information on how to take incremental backups in Barman, please check the incremental backup section.

Barman 2.10 introduces the -w/--wait option for the backup command. When set, Barman temporarily saves the state of the backup to WAITING_FOR_WALS, then waits for all the required WAL files to be archived before setting the state to DONE and proceeding with post-backup hook scripts. If the --wait-timeout option is provided, Barman will stop waiting for WAL files after the specified number of seconds, and the state will remain in WAITING_FOR_WALS. The cron command will continue to check that missing WAL files are archived, then label the backup as DONE.
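The waiting behaviour described above can be exercised with a small wrapper. This is a minimal sketch: the function name backup_and_wait and the 600-second default timeout are hypothetical choices, not Barman defaults.

```shell
# Take a backup of one server and wait for the required WAL files.
# Assumption: the 600-second default is an arbitrary placeholder; pick a
# value that suits your WAL archiving latency.
backup_and_wait() {
    server="$1"
    timeout="${2:-600}"
    barman backup --wait --wait-timeout "$timeout" "$server"
}
```

For example, backup_and_wait pg 300 would stop waiting after five minutes, leaving the backup in the WAITING_FOR_WALS state for the cron command to finalise later.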

check

You can check the connection to a given server and the configuration coherence with the check command, for example barman check <server_name>.

TIP: You can use barman check all to check all your configured servers.

IMPORTANT: The check command is probably the most critical feature that Barman implements. We recommend integrating it with your alerting and monitoring infrastructure. The --nagios option allows you to easily create a plugin for Nagios/Icinga.
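One way to wire the check into a monitoring job is a thin wrapper that forwards the exit status. This is a sketch under the assumption that barman check --nagios exits with a non-zero status when a check fails; the function name report_check is hypothetical.

```shell
# Run the check in Nagios mode and propagate its exit status.
# Assumption: a non-zero exit status from `barman check` signals a problem.
report_check() {
    server="${1:-all}"
    if output=$(barman check --nagios "$server" 2>&1); then
        echo "$output"
    else
        status=$?
        echo "barman check failed for '$server' (exit status $status): $output" >&2
        return "$status"
    fi
}
```

A monitoring agent can call report_check pg and alert on any non-zero return value.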

config-update

The config-update command is used to create or update the configuration of servers and models in Barman.

The config-update command takes a single argument, json_changes.

json_changes should be a JSON string containing an array of documents. Each document must contain the following key:

- scope: either server or model, depending on whether you want to create or update a Barman server or a Barman model.

Each document must also contain one of the following keys, depending on the value of scope:

- server_name: if scope is server, you should fill this key with the Barman server name;
- model_name: if scope is model, you should fill this key with the Barman model name.

Besides these, you should fill each document with one or more Barman configuration options along with the desired values for them.

This is an example for updating the Barman server my_server with archiver=on and streaming_archiver=off:

barman config-update '[{"scope": "server", "server_name": "my_server", "archiver": "on", "streaming_archiver": "off"}]'

NOTE: The barman config-update command writes the configuration options to a file named .barman.auto.conf, which is created under the barman_home. That configuration file takes higher precedence and overrides values coming from the Barman global configuration file (typically /etc/barman.conf) and from included files as per configuration_files_directory (typically files in /etc/barman.d). Keep that in mind if you later, for any reason, decide to manually change configuration options in those files.

config-switch

The config-switch command is used to apply a set of configuration overrides defined through a model to a Barman server. The final configuration of the Barman server is composed of the configuration of the server plus the overrides applied by the selected model. Models are particularly useful for clustered environments, so you can create different configuration models which can be used in response to failover events, for example.

The syntax for applying a model through the config-switch command is barman config-switch <server_name> <model_name>.

NOTE: the command will only succeed if <model_name> exists and belongs to the same cluster as <server_name>.

NOTE: there can be at most one model active at a time. If you run the command twice with different models, only the overrides defined for the last one apply.

The syntax for unapplying an existing active model for a server is barman config-switch <server_name> --reset.

It will take care of unapplying the overrides that were previously in place by some active model.

NOTE: this command can also be useful for recovering from a specific situation: when you have a server with an active model which was previously configured but which no longer exists in your configuration.
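In a failover runbook, the two operations can be wrapped as follows. This is a minimal sketch: the function names apply_model and reset_model are hypothetical, and the server and model names are whatever exists in your configuration.

```shell
# Apply the overrides of a model to a server, e.g. after a failover.
apply_model() {
    server="$1"
    model="$2"
    barman config-switch "$server" "$model"
}

# Remove the overrides of whichever model is currently active for a server.
reset_model() {
    server="$1"
    barman config-switch "$server" --reset
}
```

Because at most one model can be active at a time, calling apply_model with a second model replaces the overrides of the first rather than stacking them.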

generate-manifest

This command is useful when a backup was created remotely without involving pg_basebackup, so that no backup_manifest file exists in the backup. It generates the backup_manifest file for the given backup_id from the backup stored on the Barman server. If the file already exists, the command aborts.

Command example: barman generate-manifest <server_name> <backup_id>

Either a backup_id or a backup ID shortcut can be used.

This command can also be used as post_backup hook script as follows:
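A hook of this kind could look like the sketch below. It assumes that Barman exports the BARMAN_SERVER and BARMAN_BACKUP_ID environment variables to hook scripts; the function name generate_manifest_hook is hypothetical.

```shell
# Hypothetical post-backup hook body: generate the manifest for the backup
# that has just been taken.
# Assumption: Barman sets BARMAN_SERVER and BARMAN_BACKUP_ID for hook scripts.
generate_manifest_hook() {
    barman generate-manifest "$BARMAN_SERVER" "$BARMAN_BACKUP_ID"
}
```

Saved in an executable script that calls the function, this could then be referenced from the post_backup_script option of the server.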

get-wal

Barman allows users to request any xlog file from its WAL archive through the get-wal command, which takes the server name and the name of the requested WAL file as arguments.

If the requested WAL file is found in the server archive, the uncompressed content will be returned to STDOUT, unless otherwise specified.

The following options are available for the get-wal command:

- -o allows users to specify a destination directory where Barman will deposit the requested WAL file
- -j will compress the output using the bzip2 algorithm
- -x will compress the output using the gzip algorithm
- -p SIZE peeks from the archive up to SIZE WAL files, starting from the requested file
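The -o option can be combined with the server and WAL names as in the sketch below. The function name fetch_wal, the destination directory, and the WAL file name used in the usage note are placeholders.

```shell
# Fetch one WAL file from the archive of a server into a directory.
# Assumptions: run as the barman user; the destination directory is
# created if missing and must be writable.
fetch_wal() {
    server="$1"
    wal_name="$2"
    dest_dir="$3"
    mkdir -p "$dest_dir" && barman get-wal -o "$dest_dir" "$server" "$wal_name"
}
```

For example, fetch_wal pg 000000010000000000000001 /tmp/wals would deposit that WAL file, uncompressed, in /tmp/wals if it is present in the archive of the pg server.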

It is possible to use get-wal during a recovery operation, transforming the Barman server into a WAL hub for your servers. This can be automatically achieved by adding the get-wal value to the recovery_options global/server configuration option, for example recovery_options = 'get-wal'.

recovery_options is a global/server option that accepts a list of comma separated values. If the keyword get-wal is present during a recovery operation, Barman will prepare the recovery configuration by setting the restore_command so that barman get-wal is used to fetch the required WAL files. Similarly, one can use the --get-wal option for the recover command at run-time.

If get-wal is set in recovery_options but not required during a recovery operation then the --no-get-wal option can be used with the recover command to disable the get-wal recovery option.

This is an example of a restore_command for a local recovery:
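The helper below prints such a line for a given server name. It is a sketch rather than the exact text Barman generates: it assumes that the user running PostgreSQL is allowed to run barman as the barman user through sudo, and the function name local_restore_command is hypothetical.

```shell
# Print a restore_command line for a local recovery.
# %f and %p are placeholders that PostgreSQL replaces with the WAL file
# name and the destination path.
local_restore_command() {
    server="$1"
    printf "restore_command = 'sudo -u barman barman get-wal %s %%f > %%p'\n" "$server"
}

local_restore_command pg
# prints: restore_command = 'sudo -u barman barman get-wal pg %f > %p'
```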

Please note that the get-wal command should always be invoked as barman user, and that it requires the correct permission to read the WAL files from the catalog. This is the reason why we are using sudo -u barman in the example.

Setting recovery_options to get-wal for a remote recovery will instead generate a restore_command using the barman-wal-restore script. barman-wal-restore is a more resilient shell script which manages SSH connection errors.

This script has many useful options such as the automatic compression and decompression of the WAL files and the peek feature, which allows you to retrieve the next WAL files while PostgreSQL is applying one of them. It is an excellent way to optimise the bandwidth usage between PostgreSQL and Barman.

barman-wal-restore is available in the barman-cli package.

This is an example of a restore_command for a remote recovery:
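The helper below prints such a line for a given Barman host and server name. It is a sketch: it assumes that the SSH user on the Barman host is barman (passed with -U), and the function name remote_restore_command is hypothetical.

```shell
# Print a restore_command line for a remote recovery.
# Arguments: the Barman host, then the server name as configured in Barman.
remote_restore_command() {
    barman_host="$1"
    server="$2"
    printf "restore_command = 'barman-wal-restore -U barman %s %s %%f %%p'\n" \
        "$barman_host" "$server"
}

remote_restore_command backup pg
# prints: restore_command = 'barman-wal-restore -U barman backup pg %f %p'
```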

Since it uses SSH to communicate with the Barman server, SSH key authentication is required for the postgres user to log in as barman on the backup server. If a port other than the SSH default of 22 should be used, the --port option can be added to specify the port for the SSH connection.

You can check that barman-wal-restore can connect to the Barman server, and that the required PostgreSQL server is configured in Barman to send WAL files with the following command:
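A sketch of that connectivity test, wrapped in a function so it can be reused in a pre-recovery checklist. The function name test_wal_restore and the BARMAN_WAL_RESTORE override variable are hypothetical conveniences, not part of Barman.

```shell
# Check that barman-wal-restore can reach the Barman host for a server.
# BARMAN_WAL_RESTORE is a hypothetical override for the executable path.
test_wal_restore() {
    barman_host="$1"
    server="$2"
    # The two DUMMY arguments stand in for the WAL name and destination,
    # which are ignored in test mode.
    "${BARMAN_WAL_RESTORE:-barman-wal-restore}" --test "$barman_host" "$server" DUMMY DUMMY
}
```

Run it on the PostgreSQL server as the postgres user, for example test_wal_restore backup pg.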

Where backup is the host where Barman is installed, pg is the name of the PostgreSQL server as configured in Barman and DUMMY is a placeholder (barman-wal-restore requires two arguments for the WAL file name and destination directory, which are ignored).

If everything is configured correctly you should see a message confirming that the script is ready to retrieve WAL files from the server pg.

For more information on the barman-wal-restore command, type man barman-wal-restore on the PostgreSQL server.

list-backups

You can list the catalog of available backups for a given server with barman list-backups <server_name>.

TIP: You can request a full list of the backups of all servers using all as the server name.

To get a machine-readable output you can use the --minimal option, and to get the output in JSON format you can use the --format=json option.

rebuild-xlogdb

At any time, you can regenerate the content of the WAL archive for a specific server (or every server, using the all shortcut). The WAL archive is contained in the xlog.db file and every server managed by Barman has its own copy.

The xlog.db file can be rebuilt with the rebuild-xlogdb command. This will scan all the archived WAL files and regenerate the metadata for the archive.

For example, barman rebuild-xlogdb all rebuilds the file for every configured server.

receive-wal

This command manages the receive-wal process, which uses the streaming protocol to receive WAL files from the PostgreSQL streaming connection.

receive-wal process management

If the command is run without options, a receive-wal process will be started. This command is based on the pg_receivewal PostgreSQL command.

NOTE: The receive-wal command is a foreground process.

If the command is run with the --stop option, the currently running receive-wal process will be stopped.